Since the latest version of ChatGPT was released a few weeks ago, there has been some level of concern among writers that we may be the first to be replaced by AI.

Whether that concern is justified or not is beyond the scope of this article. Suffice it to say that we can now add 3D modelers to the list of those who may be concerned for their livelihoods, as OpenAI has just released a similar solution that converts text into 3D models.

The code has been released on GitHub, and a paper titled “Point·E: A System for Generating 3D Point Clouds from Complex Prompts” has been uploaded to accompany it.

Text-to-3D

While it is not the first text-to-3D program out there, it’s likely to be the one that generates the most hype over the next few weeks, as it’s not in closed beta like Imagine 3D from Luma AI, released just a couple of weeks ago.

All you need to use OpenAI’s “Point-E” is some basic Python skills, and you too can generate 3D models from text prompts.

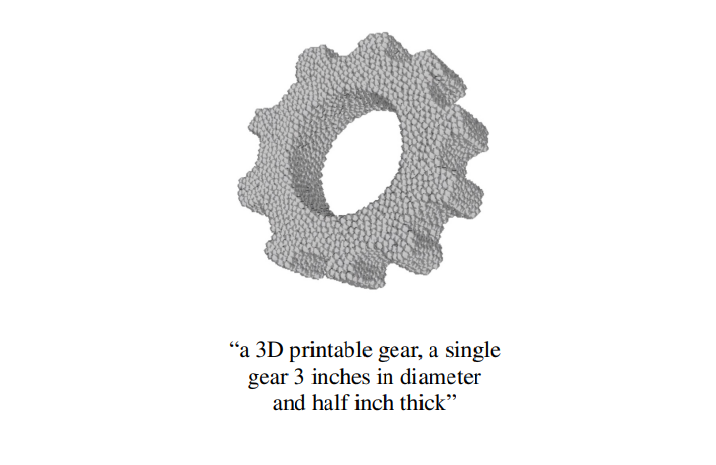

Point-E is so named because it generates point clouds rather than tessellated or surfaced models. By working with point clouds, Point-E can produce models much faster than other AI text-to-3D methods, and with a fraction of the compute power. It can generate a 3D model from your words in just a couple of minutes on a single GPU.
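To make the difference between a point cloud and a tessellated model concrete, here is a minimal Python sketch of the two representations. It does not use the Point-E code at all; the function name `sample_sphere_point_cloud` and the tetrahedron data are purely illustrative assumptions.

```python
import math
import random


def sample_sphere_point_cloud(n_points, radius=1.0, seed=0):
    """Return n_points (x, y, z) tuples scattered on the surface of a sphere.

    Hypothetical helper for illustration only; not part of Point-E.
    """
    rng = random.Random(seed)
    points = []
    for _ in range(n_points):
        # Pick a uniform random direction: z in [-1, 1], angle in [0, 2*pi).
        z = rng.uniform(-1.0, 1.0)
        theta = rng.uniform(0.0, 2.0 * math.pi)
        r_xy = math.sqrt(1.0 - z * z)
        points.append((
            radius * r_xy * math.cos(theta),
            radius * r_xy * math.sin(theta),
            radius * z,
        ))
    return points


# A point cloud is just a bag of positions: no edges, no faces.
cloud = sample_sphere_point_cloud(1024)

# A tessellated mesh also stores connectivity: each face lists the
# indices of the three vertices that form one triangle.
tetra_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
tetra_faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]

print(len(cloud), "points, no connectivity")
print(len(tetra_vertices), "vertices,", len(tetra_faces), "triangular faces")
```

The missing connectivity is what makes a point cloud cheap to generate, and it is also why a point cloud needs an extra surfacing step before it can be treated as a solid object.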

Yes, the models are a little coarse, because they are fairly low resolution point clouds. But when combined with the OpenAI point cloud-to-mesh model, a tessellated surface can be mapped from the points.

You can see the result of the mesh below.

We are a 3D printing website, so you’re probably wondering how long before we can 3D print things using the power of words alone.

A little while back, some Imagine 3D users showed that they had successfully printed models generated with the Luma AI tool.

And in the Point-E paper, there was an example of a gear point cloud modeled with the prompt seen in the image below.

Text-to-Print?

It is entirely possible to create a text-to-3D-print workflow. You can’t print a point cloud directly, though, so if you wish to use Point-E, you will first have to convert its output into a proper 3D model. You could probably do that with the SolidWorks ScanTo3D function (or similar), which is designed specifically for converting point clouds from scanned data into models for reverse engineering purposes.
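Before any conversion tool can work on a point cloud, the points have to be saved in a file format it can read. As a rough sketch of that first step, the snippet below serializes a list of points as ASCII PLY, a common interchange format for point clouds. The function name `point_cloud_to_ply` and the sample points are hypothetical, and whether a given tool accepts PLY input is something to check in its documentation.

```python
def point_cloud_to_ply(points):
    """Serialize (x, y, z) points as ASCII PLY text (vertices only, no faces).

    Hypothetical helper for illustration; not part of Point-E.
    """
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    # One line per point, coordinates separated by spaces.
    body = [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in points]
    return "\n".join(header + body) + "\n"


# Four placeholder points standing in for a generated point cloud.
example_points = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
]

ply_text = point_cloud_to_ply(example_points)
print(ply_text)
```

Saving `ply_text` to a file with a `.ply` extension gives you something that free tools such as MeshLab or CloudCompare can open, and both offer surface reconstruction options for turning points into a mesh. The result would still need to be checked for holes and exported to a printable format before slicing.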

Just as DALL-E, Stable Diffusion, and ChatGPT have all improved by terrifying leaps and bounds, it’s only a matter of time before the same happens with text-to-3D, and ultimately, with text-to-AM.

Will it make our jobs easier or will it take our jobs altogether?

Probably a mix of both, tbh. Only time will tell. But as with all industrial revolutions, as old skills are made obsolete, new ones pop up to replace them.

If you would like to play around with the Point-E model, you can download the source over at GitHub, at this link.

Alternatively, if you’d just like to read the published paper from OpenAI, you can find that over here.